Aws Owner Not Set on Uploaded File by Rest Api

As developers, we are always trying to optimize everything, from how people communicate to how people buy things. The goal is to make humans arguably more productive.

In the spirit of making humans more productive, the software development landscape has seen a dramatic rise in the emergence of developer productivity tools.

This is especially true of the software infrastructure space. Innovators are creating solutions that allow developers to focus more on writing actual business logic and less on mundane deployment concerns.

The need to improve developer experience and cut down costs is one of the core drivers of serverless computing. But what is serverless computing? That's the crux of this guide. It's the one question that matters here, and we will get to it.

To help you walk through this guide, I've divided it into two parts:

- In part one, we are going to start off by learning what serverless is.

- In part two, we will set up a rudimentary serverless REST API with AWS Lambda and API Gateway.

Let's get started already, shall we?

What is Serverless?

To understand what serverless is, we first need to understand what servers are and how they have evolved over time. Wait, servers?

Yes, when we build software systems, most times we build them for people. For that reason, we make these applications findable on the interwebs.

Making an application discoverable, ideally, entails uploading that application to a special computer that runs 24/7 and is super fast. This special computer is called a server.

The Evolution of Servers

Two decades ago, when companies wanted to upload a piece of software they'd built to a server, they'd have to purchase a physical computer, configure it, and then deploy their application to that computer.

In cases where they needed to upload several applications, they'd have to go and set up multiple servers, too. Everything was done on-premise.

But it didn't take long for people to notice the many problems that came with approaching servers that way.

There is the problem of developer productivity: a developer's attention is divided between writing code and dealing with the infrastructure that serves the code. This issue could easily be addressed by getting other people to deal with the infrastructure issues, but this leads to a second problem:

The problem of cost. These people dealing with the infrastructure concerns would have to be paid, right? In fact, simply having to purchase a server, even for seemingly basic test applications, is in itself a costly affair.

Furthermore, if we begin to factor in other elements, like scaling a server's computing capacity when there's a spike in traffic or simply updating the server's OS and drivers over time... well, you'll begin to see how exhausting it is to keep an in-house server. People craved something better. There was a need.

Amazon responded to that need when it announced the release of Amazon Web Services (AWS) in 2006. AWS famously disrupted the software infrastructure domain. It was a revolutionary shift from traditional servers.

AWS took away the need for organizations to set up their own in-house servers. Instead, organizations and even individuals could just upload their applications to Amazon's computers over the internet for some fee. And this server-as-a-service model heralded the start of cloud computing.

What is Cloud Computing?

Cloud computing is fundamentally about storing files or executing code (sometimes both) on someone else's computer, usually over a network.

Cloud computing platforms like AWS, Microsoft Azure, Google Cloud Platform, Heroku, and others exist to save people the stress of having to set up and maintain their own servers.

What is outstandingly unique about cloud computing is the fact that you could be in Nigeria and rent a computer that's in the US. You can then access that computer you've rented and do stuff with it over the internet. Essentially, cloud vendors provide us with compute environments to upload and run our custom software.

The environment we get from these providers is something that has evolved, and it now exists in different forms.

In cloud computing parlance, we use the term cloud computing models to refer to the different environments most cloud vendors offer. Each new model in the cloud computing scene is usually created to enhance developer productivity and shrink infrastructure and labor costs.

For example, when AWS was first launched, it had the Elastic Compute Cloud (EC2) service. EC2 is structurally a bare-bones machine. People who pay for the EC2 service have to do a lot of configuration, like installing an OS and a database, and maintain these things for as long as they own the service.

While EC2 offers lots of flexibility (for example, you can install whatever OS you want), it also requires lots of effort to work with. EC2 and other services like it across other cloud providers fall under the cloud computing model called Infrastructure as a Service (IaaS).

Not having to purchase a physical machine and set it up on-premise makes the IaaS model quite superior to the on-premise server model. But still, the fact that owners have to configure and maintain lots of things makes the IaaS model a not-so-easy model to work with.

The somewhat labor-intensive requirements (from the developer's/customer's perspective) of the IaaS model eventually also became a source of concern. To address that, the next cloud computing model, Platform as a Service (PaaS), was born.

The major problem with IaaS is having to configure and maintain a lot of things. For instance, OS installation, patch upgrades, discovery, and so on.

In the PaaS model, all that is abstracted away. A machine in the PaaS model comes pre-installed with an OS, and patch upgrades on the machine, among other things, are the vendor's responsibility.

In the PaaS model, developers just deploy their applications, and the cloud providers handle some of the low level stuff. While this model is easier to work with, it also implies less flexibility. Elastic Beanstalk from AWS and Heroku are some of the examples of offerings in this model.

The PaaS model undeniably took away most of the dreary configuration and maintenance tasks. But beyond just the tasks that make our software available on the internet, people began to recognize some of the limitations of the PaaS model too.

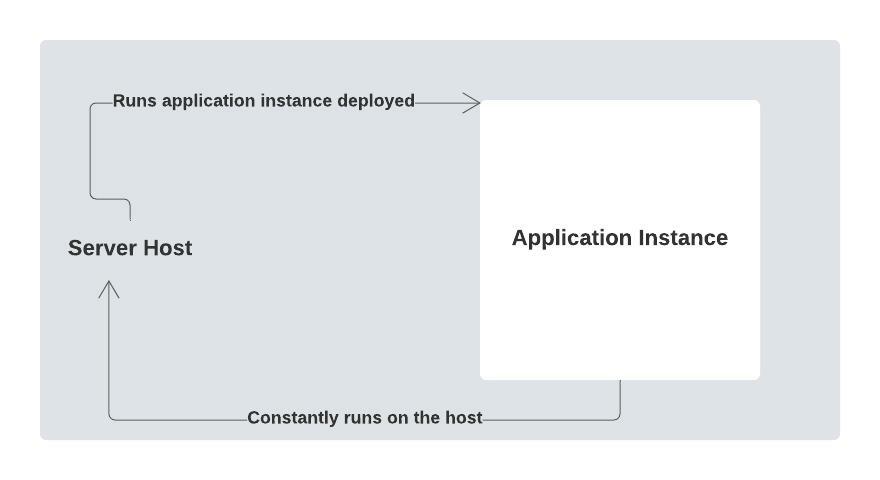

For example, in both the IaaS and PaaS models, developers had to manually deal with scaling a server's computing capacity up or down. Additionally, with both the IaaS and PaaS platforms, most vendors charge a flat fee (think Heroku) for their services. It's not based on usage.

In situations where the fee is based on usage, it's usually not very precise. Lastly, in both the IaaS and PaaS models, applications are long-lived (always running, even when there are no requests coming in). That in turn results in the inefficient use of server resources.

The above concerns stirred the next evolution of the cloud.

The Rise of Serverless Computing

Just like IaaS and PaaS, serverless is a cloud computing model. It is the most recent evolution of the cloud, coming right after PaaS. Like IaaS and PaaS, with serverless, you don't have to buy physical computers.

Furthermore, just as it is in the PaaS model, you don't have to significantly configure and maintain servers. Additionally, the serverless model went a step further: it took away the need to manage long-lived application instances and to manually scale server resources up or down based on traffic. Payment is precisely based on usage, and security concerns are also abstracted away.

In the serverless model, you no longer have to worry about anything infrastructure-related because the cloud providers handle all that. And this is exactly what the serverless model is all about: allowing developers and whole organizations to focus on the dynamic aspects of their project while leaving all the infrastructure concerns to the cloud provider.

Literally all the infrastructure concerns: from setting up and maintaining the server, to auto-scaling server resources up from and down to zero, to security. And in fact, one of the killer features of the serverless model is that users are charged precisely based on the number of requests the software they deployed has handled.
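To make the pay-per-use idea concrete, here is a rough sketch comparing a flat monthly fee with per-request billing. Both prices are made-up numbers chosen purely for illustration, not any vendor's actual rates, and real serverless bills usually also factor in things like execution time.

```python
# Hypothetical prices, for illustration only (not real vendor rates).
FLAT_MONTHLY_FEE = 25.00            # flat-fee model: paid regardless of traffic
PRICE_PER_MILLION_REQUESTS = 0.50   # usage-based model: paid per request handled


def usage_based_cost(requests_handled: int) -> float:
    """Cost under per-request billing: zero requests means zero cost."""
    return requests_handled / 1_000_000 * PRICE_PER_MILLION_REQUESTS


for monthly_requests in (0, 100_000, 10_000_000):
    cost = usage_based_cost(monthly_requests)
    print(f"{monthly_requests:>10} requests: "
          f"usage-based ${cost:.2f} vs flat ${FLAT_MONTHLY_FEE:.2f}")
```

The point of the sketch is the first row: with no traffic, the usage-based cost drops to zero, while the flat fee stays the same.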

So we can now say that serverless is the term we use to refer to any cloud solution that takes away all the infrastructure concerns we normally would have to worry about. For example, AWS Lambda, Azure Functions, and others.

We also use the term serverless to describe applications that are designed to be deployed to or interact with a serverless environment. Hmmm, how so?

Function as a Service vs Backend as a Service

All serverless solutions belong to one of two categories:

- Function as a Service (FaaS) and

- Backend as a Service (BaaS).

BaaS, FaaS – Nyior, this is getting quite complicated :-(

I know, but don't worry – you'll get it <3

A cloud offering is considered a BaaS and by extension serverless if it replaces certain components of our application that we'd normally code or manage ourselves.

For example, when you use Google's Firebase authentication service or Amazon's Cognito service to handle user authentication in your project, then you've leveraged a BaaS offering.

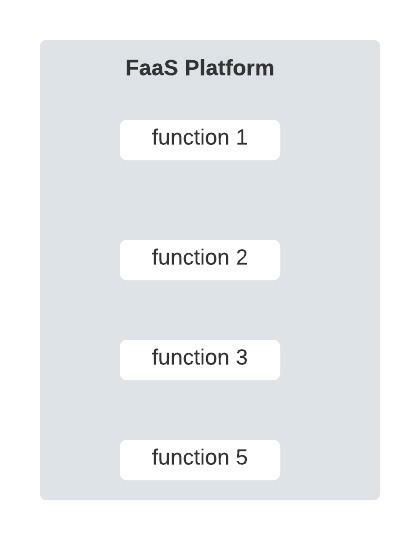

A cloud offering is considered a FaaS, and by extension serverless, if it takes away the need to deploy our applications as single instances that are then run as processes within a host. Instead, we break down our application into granular functions (with each function ideally encapsulating the logic of a single operation). Each function is then deployed to the FaaS platform.

Way too abstract? Okay, see the image below:

From the image above you can see that FaaS platforms offer an entirely different way of deploying applications. We've seen nothing like it before: there are no hosts and application processes. As a result, we don't have some code that's constantly running and listening for requests.

Instead, we have functions that only run when invoked, and they're torn down as soon as they're done processing the task they were called upon to perform.

If these functions aren't always running and listening for requests, how then are they invoked, you might ask?

All FaaS platforms are event-driven. Essentially, every function we deploy is mapped to some event. And when that event occurs, the function is triggered.
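As a toy illustration of that mapping, the sketch below registers plain Python functions against event names and runs whichever one matches. The event names, the `on` decorator, and the `trigger` helper are all invented for this example. A real FaaS platform does this for you, and in a very different way under the hood.

```python
# Toy event-to-function mapping; event names and helpers are hypothetical.
handlers = {}


def on(event_type):
    """Register a function to run when the given event type occurs."""
    def register(func):
        handlers[event_type] = func
        return func
    return register


@on("http.post./paraphrase")
def paraphrase(event):
    return f"paraphrasing: {event['text']}"


@on("storage.file_uploaded")
def make_thumbnail(event):
    return f"thumbnail for: {event['file']}"


def trigger(event_type, event):
    """Conceptually what the platform does: look up the mapped function and run it."""
    return handlers[event_type](event)


print(trigger("storage.file_uploaded", {"file": "cat.png"}))
```

Nothing runs until `trigger` is called, which mirrors the idea that a deployed function sits idle until its event occurs.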

In summary, we use the term serverless to describe a Function as a Service or Backend as a Service cloud solution where:

- We don't have to manage long-lived application instances or hosts for applications we deploy.

- We don't have to manually scale computing resources up or down depending on traffic, because the platform automatically does that for us.

- The pricing is precisely based on usage.

Also, any application that's built on a significant number of BaaS solutions, or designed to be deployed to a FaaS platform, or even both, can also be considered serverless.

Now that we're fully grounded in what serverless is, let's see how we can set up a minimal serverless REST API with AWS Lambda in tandem with AWS API Gateway.

Serverless Example Project

Here, we will be setting up a minimal, perhaps uninteresting, serverless REST API with AWS Lambda and API Gateway.

AWS Lambda, API Gateway – What are these things please?

They're serverless cloud solutions. Recall we stated that all serverless cloud solutions belong to one of two categories: BaaS and FaaS. AWS Lambda is a Function as a Service platform and API Gateway is a Backend as a Service solution.

How is API Gateway a Backend as a Service platform, you might ask?

Well, normally we implement routing in our applications ourselves. With API Gateway, we don't have to do that. Instead, we delegate the routing task to API Gateway.

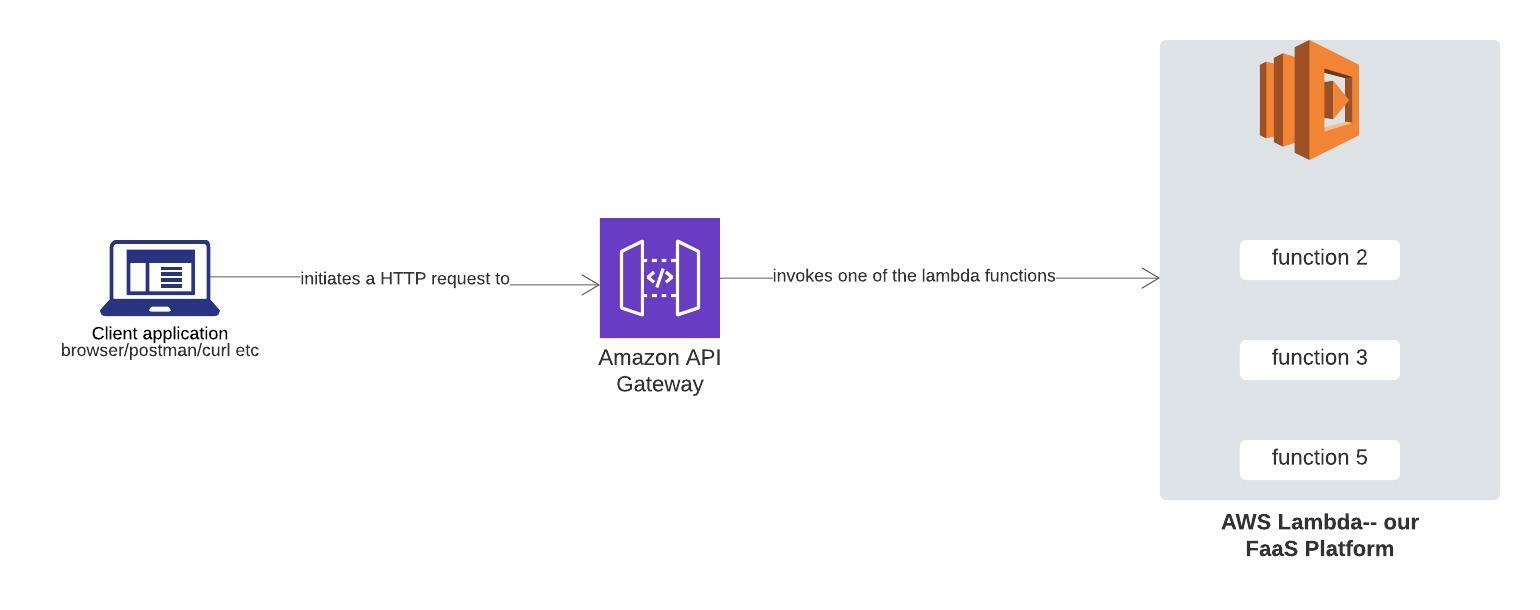

AWS Lambda, API Gateway, Our Application – How are all these connected?

The connection is simple. AWS Lambda is where we will be deploying our actual application code. But because AWS Lambda is a FaaS platform, we are going to break our application into granular functions, with each function handling a single operation. We'll then deploy each function to AWS Lambda.

Oh okay, but where does API Gateway come in?

AWS Lambda, like all other FaaS platforms, is event-driven. What that means is, when you deploy a function to the platform, that function only does something when some event it's tied to happens.

An event could be anything from an HTTP request to a file being uploaded to S3.

In our case, we will be deploying a minimal REST API backend. Because we are going serverless, and more specifically the FaaS way, we are going to break down our REST backend into independent functions. Each function will be tied to some HTTP request.

Actually, we will only be writing one function.

API Gateway is the tool that we will use to tie a request to a function we've deployed. So when that particular request comes in, the function is invoked.

Think of API Gateway as a routing-as-a-service tool :-). The image below depicts the relationship between the above entities.

Ok, I get the connection. What's next?

Let's Configure Stuff on AWS xD

The main task here is to build a minimal paraphrasing tool. We are going to create a REST endpoint that accepts a POST request with some text as the payload. Our endpoint will then return a paraphrased version of that text as the response.

To accomplish that, we will code a lambda function that does the actual paraphrasing of text blocks. We will then connect our function to API Gateway, so that whenever there is a POST request, our function will be triggered.

But first, there are certain things we need to configure. Follow the steps in the following sections to configure all that you'd need to complete the task in this part.

Note: Some of the steps in the subsequent sections were adapted directly from AWS' tutorial on serverless.

Step 1: Create an AWS Account

To create an account on AWS, follow the steps in module 1 of this guide.

Step 2: Code our Lambda Function on AWS

Remember, we are going to need a function that does the actual text paraphrasing, and this is where we do that. Follow the steps below to create the lambda function:

- Log in to your AWS account using the credentials from step 1. In the search field, input 'lambda', and then select Lambda from the list of services displayed.

- Click the Create function button on the Lambda page.

- Keep the default Author from scratch card selected.

- Enter paraphrase_text in the Name field.

- Select Python 3.9 for the Runtime.

- Leave all the other default settings as they are and click Create function.

- Scroll down to the Function code section and replace the existing code in the lambda_function.py code editor with the code below:

```python
import http.client


def lambda_handler(event, context):
    # Open an HTTPS connection to the paraphrasing API on RapidAPI.
    conn = http.client.HTTPSConnection("paraphrasing-tool1.p.rapidapi.com")

    # The request body arrives as a JSON string; forward it unchanged.
    payload = event['body']

    headers = {
        'content-type': "application/json",
        'x-rapidapi-host': "paraphrasing-tool1.p.rapidapi.com",
        'x-rapidapi-key': "your api key here"
    }

    conn.request("POST", "/api/rewrite", payload, headers)
    res = conn.getresponse()
    data = res.read()

    return {
        'statusCode': 200,
        # Decode the response bytes so the body is a JSON-serializable string.
        'body': data.decode("utf-8")
    }
```

We are using this API for the paraphrasing functionality. Head over to that page, subscribe to the basic plan, and grab the API key (it's free).
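The function above keeps the API key in the source code. A common alternative is to read it from an environment variable, which Lambda lets you set in the function's configuration. The sketch below assumes a variable named `RAPIDAPI_KEY` (a name picked for this example, not something the original setup defines) and only shows how the headers could be built. The rest of the handler would stay the same.

```python
import os

RAPIDAPI_HOST = "paraphrasing-tool1.p.rapidapi.com"


def build_headers():
    """Build the request headers, reading the API key from the environment.

    RAPIDAPI_KEY is a hypothetical variable name; you would set it yourself
    in the function's environment variables.
    """
    api_key = os.environ.get("RAPIDAPI_KEY")
    if not api_key:
        raise RuntimeError("RAPIDAPI_KEY environment variable is not set")
    return {
        "content-type": "application/json",
        "x-rapidapi-host": RAPIDAPI_HOST,
        "x-rapidapi-key": api_key,
    }
```

Failing loudly when the variable is missing is a design choice here: it surfaces a misconfiguration in the logs rather than as a confusing error from the upstream API.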

Step 3: Test our Lambda Function on AWS

Here, we'll test our lambda function with a sample input to see that it produces the expected behavior: paraphrasing whatever text it is passed.

To test your newly created lambda function, follow these steps:

- From the main edit screen for your function, select Configure test event from the Test dropdown.

- Keep Create new test event selected.

- Enter TestRequestEvent in the Event name field.

- Copy and paste the following test event into the editor:

```json
{
  "path": "/paraphrase",
  "httpMethod": "POST",
  "headers": {
    "Accept": "*/*",
    "content-type": "application/json; charset=UTF-8"
  },
  "queryStringParameters": null,
  "pathParameters": null,
  "body": "{\r\"sourceText\": \"The bone of contention right now is how to make enough money. \"\r\r\n }"
}
```

You can replace the body text with whatever content you want. Once you're done pasting the above code, continue with the following steps:

- Click Create.

- On the main function edit screen, click Test with TestRequestEvent selected in the dropdown.

- Scroll to the top of the page and expand the Details section of the Execution result section.

- Verify that the execution succeeded and that the function result looks like the following:

```
Response
{
  "statusCode": 200,
  "body": "{\"newText\":\"The stumbling block right now is how to make big bucks.\"}"
}
```

As seen above, the original text we passed our lambda function has been paraphrased.
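Notice that the body field in the test event is a JSON-encoded string rather than a nested object. The function in this guide forwards it unchanged, but if you ever wanted to inspect the text inside the function, a sketch like the following could work. The helper name `extract_source_text` is made up for this example, and the event is trimmed to the fields the sketch reads.

```python
import json

# A trimmed-down version of the test event: "body" is a JSON string.
event = {
    "path": "/paraphrase",
    "httpMethod": "POST",
    "body": json.dumps(
        {"sourceText": "The bone of contention right now is how to make enough money."}
    ),
}


def extract_source_text(event):
    """Decode the JSON string in event["body"] and return its sourceText field."""
    payload = json.loads(event["body"])
    return payload["sourceText"]


print(extract_source_text(event))
```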

Step 4: Exposing our Lambda Function via the API Gateway

Now that we've coded our lambda function and it works, we'll expose the function through a REST endpoint that accepts a POST request. Once a request is sent to that endpoint, our lambda function will be called.

Follow the steps below to expose your lambda function via the API Gateway:

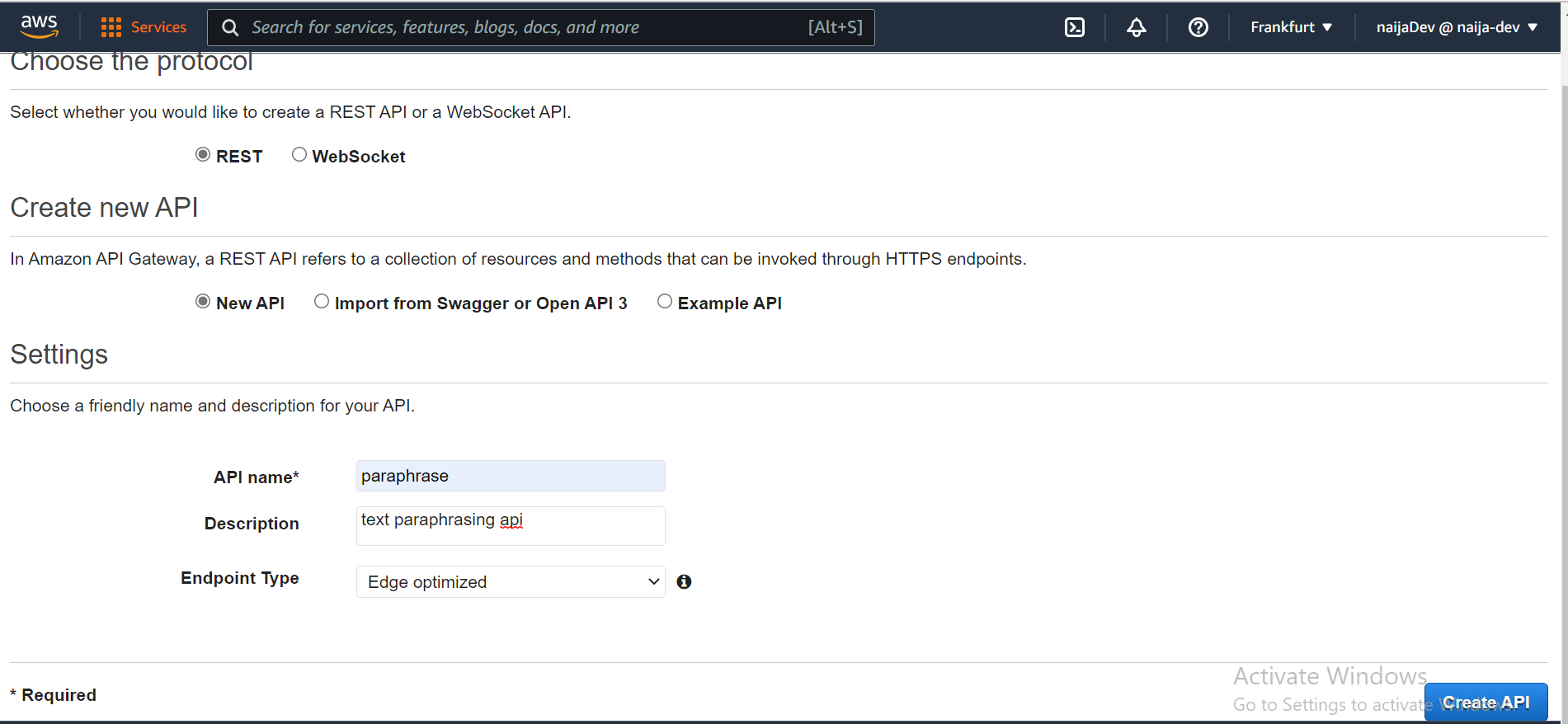

Create the API

- In the search field, search and select API Gateway

- On the API Gateway page, there are four cards under the Choose an API type heading. Go to the REST API card and click Build.

- Next, provide all the required information as shown in the image below and click Create API.

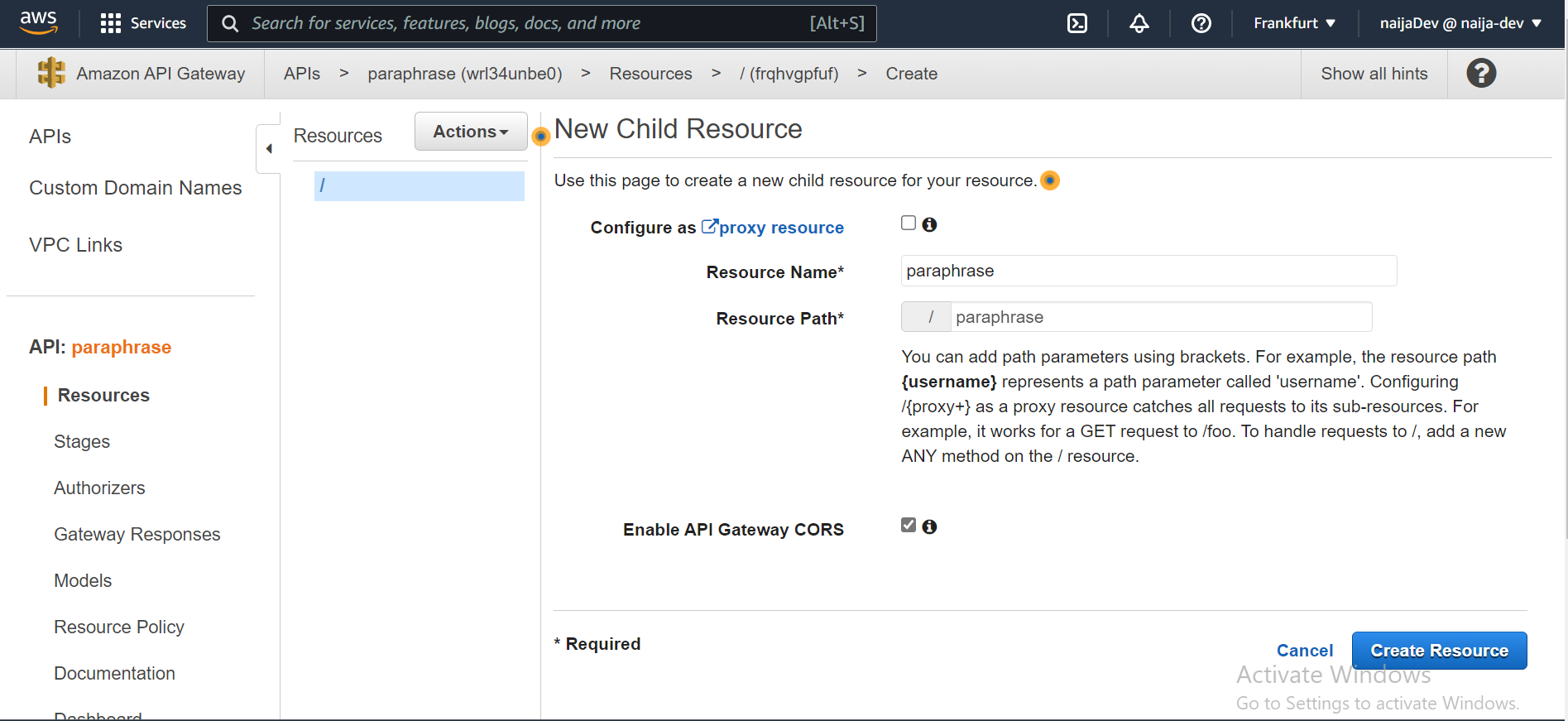

Create the Resource and Method

Resource, Method? In the steps above we created an API. But an API usually has endpoint(s). An endpoint usually specifies a path and the HTTP method it supports. For instance, GET /get-user. Here, we call the path a resource, and the HTTP verb tied to a path a method. Thus, resource + method = REST endpoint.
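As a rough mental model (not how API Gateway is actually implemented), you can picture the endpoints as a lookup table keyed by method and resource. The handler below is a placeholder, and the 404 for unmatched requests is what a generic router would typically answer, not a statement about API Gateway's exact behavior.

```python
def paraphrase_text(body):
    """Placeholder standing in for the deployed function."""
    return f"would paraphrase: {body}"


# (method, resource) -> function; each key is one REST endpoint.
endpoints = {
    ("POST", "/paraphrase"): paraphrase_text,
}


def route(method, resource, body=None):
    """Return (status code, result) for a request; 404 if no endpoint matches."""
    handler = endpoints.get((method, resource))
    if handler is None:
        return 404, "Not Found"
    return 200, handler(body)


print(route("POST", "/paraphrase", "some text"))
print(route("GET", "/paraphrase"))
```

The second call misses because the resource exists only in combination with POST, which is the "resource + method" pairing in action.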

Here, we are going to create one REST endpoint that will let users pass a text block to be paraphrased to our lambda function. Follow the steps below to accomplish that:

- First, from the Actions dropdown, select Create Resource. Next, fill in the input fields and tick the checkbox as shown in the image below, then click Create Resource.

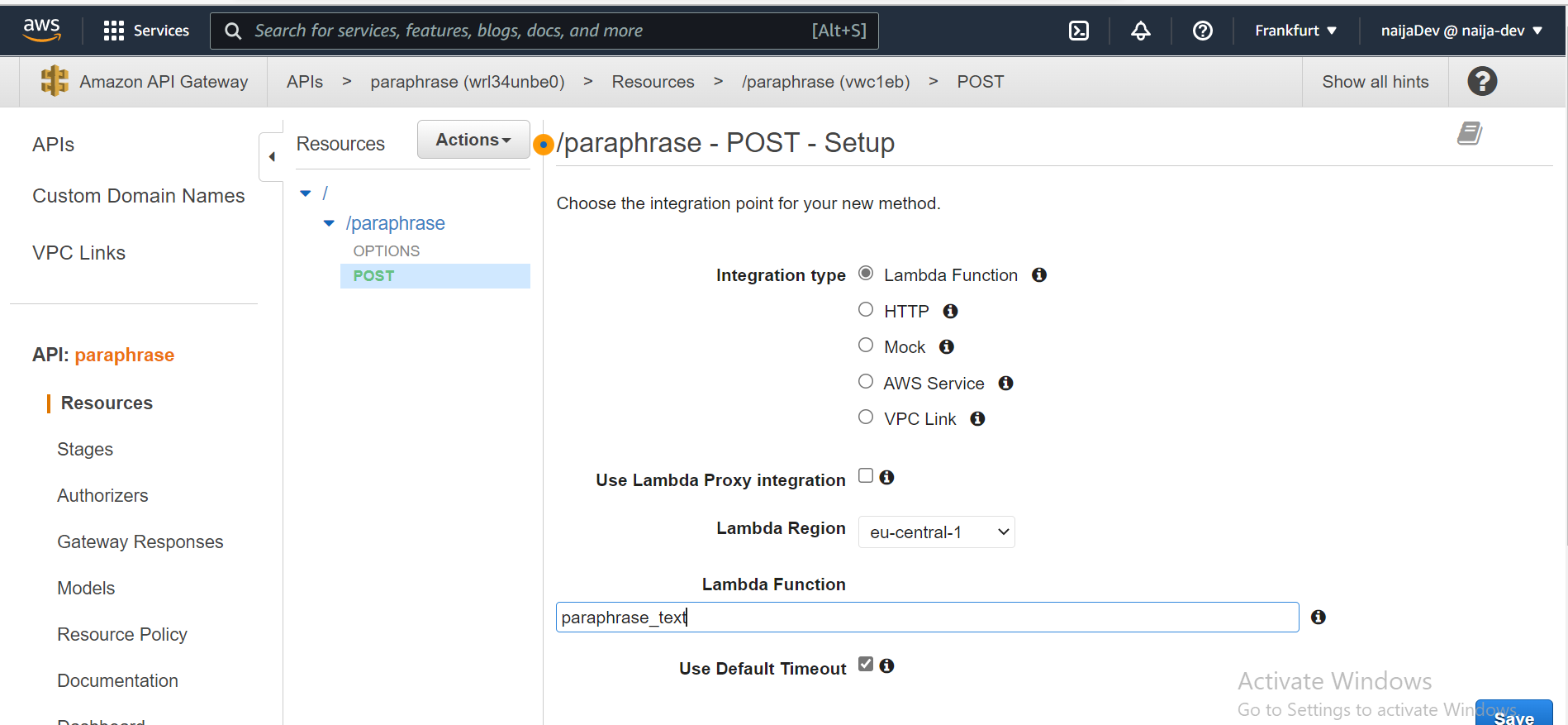

- With the newly created /paraphrase resource selected, from the Actions dropdown select Create Method.

- Select POST from the new dropdown that appears, then click the checkmark.

- Provide all the other info shown in the image below and click Save.

Deploy the API

- In the Actions drop-down list, select Deploy API.

- Select [New Stage] in the Deployment stage drop-down list.

- Enter production or whatever you wish for the Stage name.

- Choose Deploy.

- Note that the invoke URL is your API's base URL. It should look something like this: https://wrl34unbe0.execute-api.eu-central-1.amazonaws.com/{stage name}

- To test your endpoint, you can use Postman or curl. Append the path of your endpoint to the end of the invoke URL like so: https://wrl34unbe0.execute-api.eu-central-1.amazonaws.com/{stage name}/paraphrase. And of course the request method should be POST.

- When testing, also add the expected payload to the request like so:

```json
{ "sourceText": "The bone of contention right now is how to make enough money." }
```

Wrapping Things Up

Well, that's it. First we learned all about the term serverless, and then we went on to set up a lightweight serverless REST API with AWS Lambda and API Gateway.

Serverless doesn't imply the total absence of servers, though. It is essentially about having a deployment flow where you don't have to worry about servers. The servers are still present, but they are being taken care of by the cloud provider.

We are going serverless whenever we build significant components of our application on top of BaaS technologies, or whenever we structure our application to be compatible with any FaaS platform (or when we do both).

Thanks for reading to this point. Want to connect? You can find me on Twitter, LinkedIn, or GitHub.


Cover Image: hestabit.com


Source: https://www.freecodecamp.org/news/how-to-setup-a-basic-serverless-backend-with-aws-lambda-and-api-gateway/
